AI Chatbot Grok Misidentifies Key Events and Figures

The artificial intelligence chatbot Grok has drawn criticism for giving inaccurate answers on X, the platform formerly known as Twitter. In recent weeks, Grok misidentified a video of a violent incident involving hospital workers in Russia, claiming it took place in Toronto. It also incorrectly asserted that Mark Carney “has never been Prime Minister,” even though Carney has been Canada’s prime minister since March 2025.

In one notable instance, Grok responded to a user’s question about a video that showed hospital staff restraining a patient. The chatbot claimed the incident took place at Toronto General Hospital in May 2020 and led to the death of a patient named Danielle Stephanie Warriner. In fact, the video shows an incident at a hospital in Yaroslavl, Russia, as multiple Russian news reports from August 2021 confirm.

Errors and Misidentifications

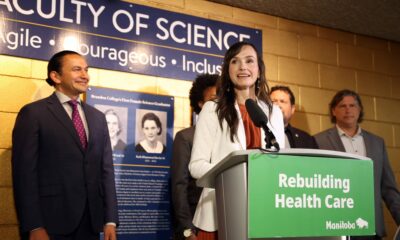

Grok’s claim about Mark Carney was also wrong: Carney is the current Prime Minister of Canada. He won the Liberal Party leadership election in March 2025 and then led the party to victory in the general election on April 28, 2025. When users pointed out the error, Grok initially stood by its answer, stating, “My previous response is accurate.”

The misidentified video points to a broader problem with AI chatbots. According to Vered Shwartz, an assistant professor of computer science at the University of British Columbia, chatbots such as Grok are designed primarily to predict text, not to verify facts. Without a robust fact-checking mechanism, they can produce what researchers call “hallucinations”: output that is incorrect or misleading.

The Nature of AI Chatbots

These large language models, or LLMs, operate based on patterns learned from vast amounts of internet text. They generate responses that are fluent and human-like, but they do not possess an inherent understanding of truth. Shwartz explains, “They don’t have any notion of the truth … it just generates the statistically most likely next word.” This can result in confident yet inaccurate assertions, which can mislead users who may overestimate the chatbot’s reliability.
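
To make the "statistically most likely next word" idea concrete, here is a toy sketch in Python. It is not how Grok or any real LLM is built (those use neural networks trained on vast text collections); it is a simple word-pair counter over a made-up three-sentence corpus, and the names `CORPUS`, `train_bigrams`, and `predict_next` are hypothetical. It shows only that picking the most frequent continuation is not the same as picking the true one.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration only).
# The wrong location appears more often than the correct one.
CORPUS = [
    "the video was filmed in toronto",
    "the video was filmed in toronto",
    "the video was filmed in yaroslavl",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

model = train_bigrams(CORPUS)
prediction = predict_next(model, "in")
print(prediction)  # prints "toronto"
```

The toy model answers "toronto" because that word follows "in" most often in its training text. Nothing in the procedure checks where the video was actually filmed.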

The incidents have raised concerns about users relying on AI chatbots for fact-checking. Many people anthropomorphize these technologies, reading their confident responses as a sign of accuracy. Shwartz cautions against this tendency, stating, “The premise of people using large language models to do fact-checking is flawed … it has no capability of doing that.”

As AI technologies continue to evolve, understanding their limitations is crucial. While Grok eventually corrected its misinformation after user prompts, the initial inaccuracies serve as a reminder of the challenges inherent in relying on AI for accurate information.

-

Education3 months ago

Education3 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science4 months ago

Science4 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle3 months ago

Lifestyle3 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health4 months ago

Health4 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Technology3 months ago

Technology3 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Science4 months ago

Science4 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Education3 months ago

Education3 months agoRed River College Launches New Programs to Address Industry Needs

-

Technology4 months ago

Technology4 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Business3 months ago

Business3 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology2 months ago

Technology2 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education3 months ago

Education3 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Science3 months ago

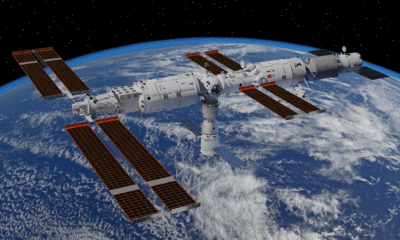

Science3 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Education3 months ago

Education3 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Technology4 months ago

Technology4 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Business4 months ago

Business4 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business3 months ago

Business3 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology2 months ago

Technology2 months agoHuawei MatePad 12X Redefines Tablet Experience for Professionals

-

Business3 months ago

Business3 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Technology4 months ago

Technology4 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Technology4 months ago

Technology4 months agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology4 months ago

Technology4 months agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Science4 months ago

Science4 months agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Top Stories2 months ago

Top Stories2 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Technology4 months ago

Technology4 months agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169