Science

OpenAI Unveils Cyber-Resilience Strategy Amid Security Concerns

OpenAI has introduced a new strategy aimed at enhancing its cyber resilience, a move that follows recent criticisms regarding the security implications of its rapidly advancing artificial intelligence technologies. The announcement came shortly after the company revealed its plans for the next iteration of its model, with GPT-5.2 being announced just weeks after GPT-4. This strategy underscores OpenAI’s commitment to addressing the cybersecurity risks associated with its evolving AI capabilities.

The company is focusing on fortifying its models for defensive cybersecurity tasks and developing tools designed to assist defenders in auditing code and patching vulnerabilities. Despite these efforts, OpenAI has cautioned that its future AI models may present significant cybersecurity risks, including the potential for creating effective zero-day exploits or aiding complex cyber-espionage operations. To mitigate these threats, OpenAI is implementing a defence-in-depth approach, which emphasizes access controls, infrastructure hardening, and continuous monitoring.

As OpenAI seeks to enhance its security measures, questions arise regarding their adequacy. Analysts are particularly concerned about how organizations can evaluate the safety of AI models for deployment in production environments. Furthermore, while OpenAI is investing in security tools for developers, there remains uncertainty regarding the effectiveness of these measures for defenders who do not have control over the underlying code or infrastructure.

To explore these issues further, Digital Journal spoke with Mayank Kumar, Founding AI Engineer at DeepTempo, an AI solution focused on threat detection. Kumar expressed a cautiously optimistic view on OpenAI’s developments, stating, “I welcome progress, especially that of AI and chatbots, which are so widely used, abused, and lacking in oversight.” However, he pointed out that OpenAI’s security initiatives primarily benefit developers who have direct control over the code, potentially leaving vulnerabilities unaddressed.

Kumar emphasized the inherent security limitations of AI systems, noting that the prompt remains a critical vulnerability and a persistent attack interface. He explained, “While these agentic tools help reduce pre-deployment vulnerabilities, any safeguard focused solely on sanitising the input will be brittle.” He further elaborated that the core challenge lies in detecting multi-step, agentic actions that can circumvent prompt filters, particularly in live environments where the AI operates.

The rapid evolution of AI threats has led Kumar to conclude that static safeguards for large language models (LLMs) are at a disadvantage in a fast-paced landscape. He remarked, “Attackers can generate multiple versions of prompts with the same intent to bypass content filters quicker than vendors can patch them.” This speed mismatch, he argues, underscores the inadequacy of front-end prompt refusal as a standalone security measure for enterprises.

Kumar advocates for a shift in defensive strategies, suggesting that organizations should focus on monitoring the actions of AI agents in real time rather than solely blocking input. He recommends that enterprises evaluate AI safety by examining the entire application stack, which includes validating robustness against prompt injection, ensuring alignment with corporate policies, and maintaining comprehensive logging of inputs and actions.

He stressed the importance of enforcing the principle of least privilege on AI agents, which involves strictly limiting their access to tools, APIs, and data. Kumar concluded by stating that the most effective defense strategy includes deploying continuously monitored AI systems, where specialized detection models can analyze agent behavior and flag any anomalous or potentially malicious actions immediately.

As OpenAI navigates these complex challenges, the implications for the broader business community are significant. Organizations must adapt their security frameworks to encompass the rapid advancements in AI technology while ensuring that robust safeguards are in place to protect against emerging threats.

-

Education3 months ago

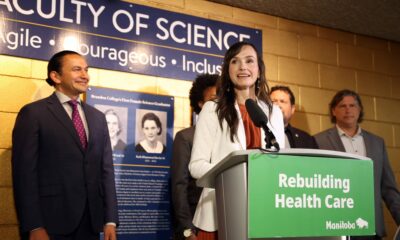

Education3 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science4 months ago

Science4 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle4 months ago

Lifestyle4 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health4 months ago

Health4 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Science4 months ago

Science4 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Technology4 months ago

Technology4 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Education4 months ago

Education4 months agoRed River College Launches New Programs to Address Industry Needs

-

Business3 months ago

Business3 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology4 months ago

Technology4 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Technology2 months ago

Technology2 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education4 months ago

Education4 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Education4 months ago

Education4 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Science4 months ago

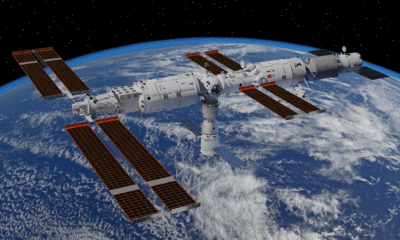

Science4 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Business4 months ago

Business4 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Business4 months ago

Business4 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business4 months ago

Business4 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology4 months ago

Technology4 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Top Stories3 months ago

Top Stories3 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Technology4 months ago

Technology4 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Technology2 months ago

Technology2 months agoHuawei MatePad 12X Redefines Tablet Experience for Professionals

-

Technology4 months ago

Technology4 months agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology4 months ago

Technology4 months agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Science4 months ago

Science4 months agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Technology4 months ago

Technology4 months agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169