Technology

Australia Enforces Age Checks, Apple Adapts to New Regulations

Australia’s new social media law, which takes effect on December 10, 2025, requires major platforms to prevent users under the age of 16 from creating or keeping accounts. The regulation puts Apple at the center of a significant compliance challenge, pushing the company to offer age verification mechanisms across its App Store. The law targets social media applications directly and places the onus of compliance on those platforms, many of which operate within Apple’s ecosystem.

Non-compliance can draw fines of up to A$49.5 million, enforced by the eSafety Commissioner. Rather than relying solely on mandatory identification scans, platforms are expected to use age-assurance tools and behavioral indicators. This expectation has prompted discussion of possible legal challenges and alternative approaches as companies work through the new requirements.

Apple’s response includes a new compliance toolkit that developers can use to meet the law’s requirements. This makes the App Store an intermediary between lawmakers and the apps it distributes, effectively giving Apple a quasi-regulatory role.

Apple’s Compliance Toolkit: Features and Functions

On December 8, 2025, Apple briefed developers on the steps needed to comply with the new rules. The company stresses that developers are responsible for deactivating accounts held by users under 16 and for blocking new sign-ups from them. The key tool Apple is providing is the Declared Age Range API, which lets an app request a user’s age range rather than an exact birth date.

The API lets apps identify users under 16 and adjust their features accordingly. For instance, a social media app could block account creation for minors while still offering the full experience to adult users. Beyond the API, Apple suggests developers update their App Store listings to state that their services are not available to users under 16 in Australia. This includes answering new age-rating questions that cover age-assurance measures and parental controls.
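To make the gating step concrete, here is a minimal Python sketch of the decision an app might make once it holds an age-range signal. It assumes a hypothetical `AgeRange` type with inclusive lower and upper bounds, loosely modeled on the kind of range the Declared Age Range API returns; it does not call Apple’s actual framework, and the names `AgeRange`, `Decision`, `age_gate_decision`, and `accounts_to_deactivate` are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Optional

# Hypothetical stand-in for an age-range signal. This is NOT Apple's
# DeclaredAgeRange framework, only a simplified model of the data it provides.
@dataclass(frozen=True)
class AgeRange:
    lower_bound: Optional[int]  # inclusive lower age, None if unknown
    upper_bound: Optional[int]  # inclusive upper age, None if open-ended


class Decision(Enum):
    ALLOW = "allow"        # signal shows the user is 16 or older
    BLOCK = "block"        # signal shows the user is under 16
    FALLBACK = "fallback"  # no usable signal; use another age-assurance method


MINIMUM_AGE = 16  # Australia's minimum age for covered social media accounts


def age_gate_decision(age_range: Optional[AgeRange], region: str) -> Decision:
    """Decide how to treat a user given a (possibly missing) age range."""
    if region != "AU":
        # Simplification: rules in other jurisdictions are out of scope here.
        return Decision.ALLOW
    if age_range is None:
        # The user (or a parent) declined to share an age range.
        return Decision.FALLBACK
    if age_range.upper_bound is not None and age_range.upper_bound < MINIMUM_AGE:
        return Decision.BLOCK
    if age_range.lower_bound is not None and age_range.lower_bound >= MINIMUM_AGE:
        return Decision.ALLOW
    # The range straddles 16 (e.g. 13-17), so it cannot settle the question.
    return Decision.FALLBACK


def accounts_to_deactivate(
    accounts: Iterable[tuple[str, str, Optional[AgeRange]]],
) -> list[str]:
    """Return IDs of existing accounts whose age range places them under 16 in Australia."""
    return [
        account_id
        for account_id, region, age_range in accounts
        if age_gate_decision(age_range, region) is Decision.BLOCK
    ]
```

The same check serves both duties the law imposes: screening new sign-ups and flagging existing accounts for deactivation. In this sketch a declined request or a range that straddles 16 returns `Decision.FALLBACK` rather than a yes/no answer, which leaves room for the other age-assurance methods, such as behavioral indicators, that regulators expect platforms to use. Whether a fallback should block, allow, or trigger a further check is a policy decision the sketch deliberately leaves open.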

While these tools do not guarantee compliance by themselves, they create a framework that allows developers to integrate age checks more easily within Apple’s platform, rather than developing independent systems.

Implications for Developers and Broader Regulatory Landscape

The law poses a significant challenge for social media companies, which must identify and deactivate under-16 accounts. Although Apple supplies the tools, legal responsibility remains firmly with developers. Relying on Apple’s age-range signals may be more appealing than running separate identity verification, especially for smaller platforms that lack the resources for extensive compliance work.

Apple has long promoted its commitment to child safety, highlighting features such as Screen Time and content restrictions. However, lawmakers often give these built-in protections little weight. The Australian government has been particularly active: the eSafety Commissioner has previously criticized Apple and other tech firms for not doing enough against child abuse material.

The rollout of tools such as the Declared Age Range API reflects a broader trend of Apple positioning itself as a facilitator of compliance in a market that increasingly demands accountability from technology companies. The approach also fits the preference of Australian lawmakers for age checks based on existing data rather than mandatory document uploads.

As a result, Apple is effectively increasing its influence over how age verification is conducted, leading to concerns that this could establish a baseline standard for compliance that may extend beyond Australia. Developers may face pressure to adopt these tools, not only to comply with Australian regulations but also to align with potential future laws in other jurisdictions.

Australia’s new law underscores the enforcement burden placed on private platforms, and critics worry that minors could get around the restrictions with VPNs or false information. Apple cannot fully solve these problems, but its measures show a willingness to act as an intermediary between legislators and developers.

As regulation evolves, Apple’s growing role may draw further scrutiny of its influence over compliance standards. If regulators accept Apple’s toolkit as a definitive solution, it could set a precedent in which a single platform dictates compliance protocols for social media apps worldwide.

-

Education3 months ago

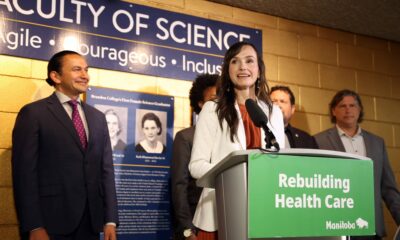

Education3 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science4 months ago

Science4 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle3 months ago

Lifestyle3 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health4 months ago

Health4 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Science4 months ago

Science4 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Technology4 months ago

Technology4 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Education4 months ago

Education4 months agoRed River College Launches New Programs to Address Industry Needs

-

Technology4 months ago

Technology4 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Business3 months ago

Business3 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology2 months ago

Technology2 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education4 months ago

Education4 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Education3 months ago

Education3 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Science4 months ago

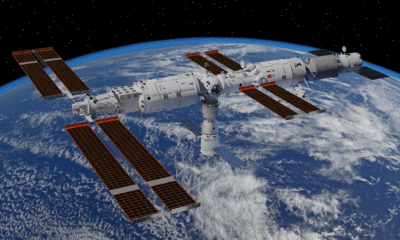

Science4 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Business4 months ago

Business4 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Technology4 months ago

Technology4 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Business4 months ago

Business4 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business4 months ago

Business4 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology4 months ago

Technology4 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Technology2 months ago

Technology2 months agoHuawei MatePad 12X Redefines Tablet Experience for Professionals

-

Technology4 months ago

Technology4 months agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology4 months ago

Technology4 months agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Top Stories3 months ago

Top Stories3 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Science4 months ago

Science4 months agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Technology4 months ago

Technology4 months agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169