Startup Uncovers Hallucinated Citations in 50 Papers Submitted for Peer Review

A recent investigation by the startup GPTZero found that 50 submissions under peer review at the International Conference on Learning Representations (ICLR) contained at least one fabricated citation. The finding raises serious concerns about the integrity of research in the fast-moving field of artificial intelligence (AI).

The Toronto-based company ran its Hallucination Check tool on a sample of 300 papers submitted to ICLR and found that 50 of them included at least one "obvious" hallucination: a citation generated by AI that does not correspond to a real source. Examples included citations attributed to non-existent authors and misattributed journal articles. Many of these submissions had been reviewed by three to five experts, most of whom failed to catch the errors.

Alex Cui, co-founder and CTO of GPTZero, said he was surprised by the results: "We just struck gold but kind of in the wrong way." He noted that, without intervention, the affected papers had been rated highly enough to be accepted for publication, potentially undermining the quality of the scholarly record.

Collaboration with ICLR and Future Steps

GPTZero has since been working with ICLR's program chairs to determine whether other submissions contain similar hallucinations. Cui said the company is now analyzing all of the roughly 20,000 submissions to ICLR 2026 ahead of the deadline for acceptance announcements.

Colin Raffel, an associate professor at the University of Toronto and an ICLR program chair, said that he and his colleagues continue to identify and reject submissions that violate academic integrity policies. The goal is to keep the peer-review process rigorous as AI becomes more deeply embedded in academic work.

GPTZero, founded by Cui and Edward Tian, began as a web application in December 2022 and quickly attracted 30,000 users. After its official launch in January 2023, its user base grew to four million by 2024, backed by a $10 million funding round led by Nikhil Basu Trivedi, co-founder of Footwork. The company now reports around 10 million users, including institutions such as Purdue University and UCLA.

Impact of AI on Academic Integrity

The implications of GPTZero's findings extend beyond ICLR. Blair Attard-Frost, an assistant professor of political science at the University of Alberta and a fellow at the Alberta Machine Intelligence Institute, said the rising number of AI-generated papers is putting increasing strain on peer review. She noted that many academics are already overwhelmed, which makes oversights during review more likely.

Research into the role of large language models (LLMs) in academia has revealed both promise and pitfalls. A study published in September 2023 in the Yale Journal of Biology and Medicine found that LLMs such as OpenAI's ChatGPT could effectively identify methodological flaws in journal reviews. However, other studies have found that LLMs often produce inaccurate citations and have been linked to inflated acceptance rates for submitted papers.

Attard-Frost said the surge in AI-generated content adds pressure on journals that are already grappling with high submission volumes. She cautioned that relying solely on AI tools for citation verification could produce false flags, in which legitimate papers are incorrectly identified as AI-generated.

To reduce the risk of hallucinated citations, she proposed alternative models such as a compounding submission fee: first authors could submit one paper for free, with fees increasing for each subsequent submission, which would discourage careless use of AI.

Cui stressed the importance of using AI responsibly in academia. "Let's use AI, but then let's make sure we're also holding those things it produces up to a higher standard," he said. Despite the challenges, he remains optimistic that tools like GPTZero's can strengthen accountability in academic publishing.

As AI becomes more integrated into academic work, GPTZero's findings are a reminder of the need to uphold standards of scholarly integrity. Addressing these issues will require ongoing dialogue and collaboration among academics, institutions, and technology developers so that the benefits of AI do not come at the expense of research quality.

-

Education4 months ago

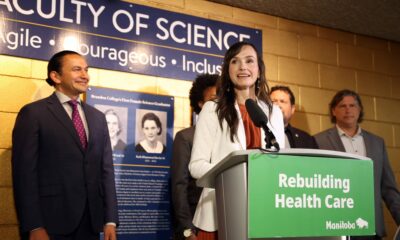

Education4 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science5 months ago

Science5 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle4 months ago

Lifestyle4 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health5 months ago

Health5 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Science5 months ago

Science5 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Technology5 months ago

Technology5 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Education5 months ago

Education5 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Education5 months ago

Education5 months agoRed River College Launches New Programs to Address Industry Needs

-

Business4 months ago

Business4 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology5 months ago

Technology5 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Top Stories4 weeks ago

Top Stories4 weeks agoCanadiens Eye Elias Pettersson: What It Would Cost to Acquire Him

-

Technology3 months ago

Technology3 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education5 months ago

Education5 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Business1 month ago

Business1 month agoEngineAI Unveils T800 Humanoid Robot, Setting New Industry Standards

-

Business5 months ago

Business5 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Science5 months ago

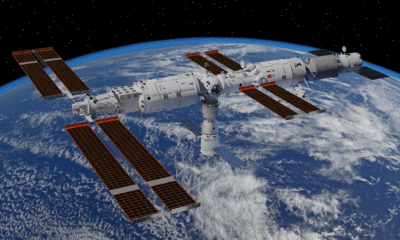

Science5 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Lifestyle3 months ago

Lifestyle3 months agoCanadian Author Secures Funding to Write Book Without Financial Strain

-

Business5 months ago

Business5 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business3 months ago

Business3 months agoHydro-Québec Espionage Trial Exposes Internal Oversight Failures

-

Business5 months ago

Business5 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology5 months ago

Technology5 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Top Stories4 months ago

Top Stories4 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Top Stories3 months ago

Top Stories3 months agoPatrik Laine Struggles to Make Impact for Canadiens Early Season

-

Technology5 months ago

Technology5 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge