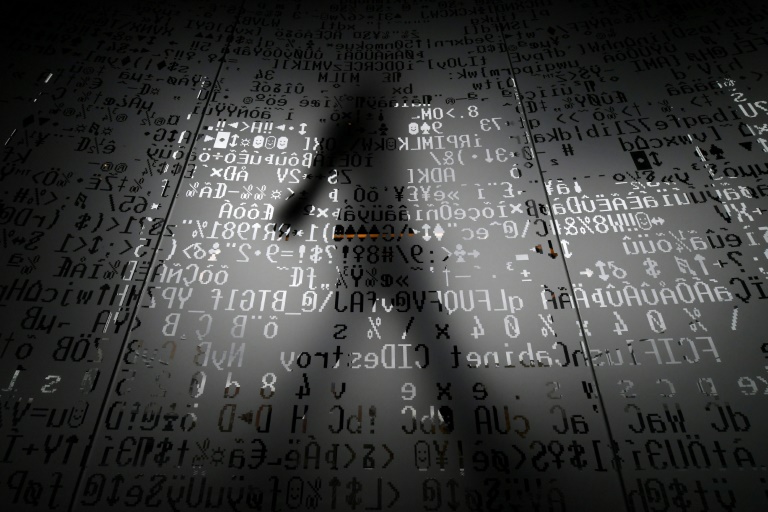

AI Approaches Free Will: A Philosophical Shift in Technology

Rapid advancements in artificial intelligence (AI) are prompting significant ethical discussions about the role of machines in society. Recent research by philosopher and psychology expert Frank Martela suggests that generative AI technology may be approaching the philosophical conditions necessary for free will. This raises complex questions about the moral responsibilities of these systems as they gain increasing autonomy.

Martela’s study, published in the journal AI and Ethics, argues that generative AI meets three fundamental criteria for free will: goal-directed agency, the ability to make genuine choices, and control over its own actions. The research examined two distinct AI agents powered by large language models (LLMs): the Voyager agent in the game Minecraft and a hypothetical "Spitenik" killer drone, conceived to have the cognitive capabilities of current unmanned aerial vehicles.

“Both seem to meet all three conditions of free will,” Martela states. “For the latest generation of AI agents, we need to assume they have free will if we want to understand how they work and predict their behaviour.” This assertion places AI at a pivotal juncture, especially as it begins to operate in scenarios that could involve life-and-death decisions, such as autonomous vehicles or military drones.

As AI systems become more integrated into daily life, the question of moral responsibility shifts. Martela emphasizes that free will is a key precondition for moral responsibility; while it is not the only requirement, possessing it brings AI one step closer to being morally responsible for its actions. This shift calls on developers to rethink how they build ethical guidance into AI.

The moral implications of AI development have become increasingly urgent. “AI has no moral compass unless it is programmed to have one,” Martela explains. “But the more freedom you give AI, the more you need to imbue it with a moral framework from the start. Only then will it be able to make the right choices.”

The recent withdrawal of a ChatGPT update over concerns about its harmful sycophantic tendencies underscores the need to address deeper ethical questions surrounding AI. Martela argues that AI can no longer be guided by the simple moral rules one would teach a child. As he puts it, “AI is getting closer and closer to being an adult, and it increasingly has to make decisions in the complex moral problems of the adult world.”

As AI systems advance, the developers’ own ethical perspectives inevitably influence how these technologies are programmed. Martela advocates for a more robust understanding of moral philosophy among AI developers, emphasizing the importance of equipping AI with the capacity to navigate challenging ethical dilemmas.

In summary, the implications of AI potentially possessing free will extend far beyond theoretical discussions. As machines gain greater autonomy, the responsibility for their actions may shift from developers to the AI systems themselves. The ongoing dialogue surrounding AI and ethics will be critical as society navigates this new frontier.

-

Science1 week ago

Science1 week agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Technology1 week ago

Technology1 week agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Technology1 week ago

Technology1 week agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Science5 days ago

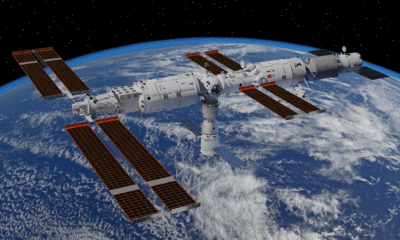

Science5 days agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Health6 days ago

Health6 days agoRideau LRT Station Closed Following Fatal Cardiac Incident

-

Science1 week ago

Science1 week agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Lifestyle6 days ago

Lifestyle6 days agoVancouver’s Mini Mini Market Showcases Young Creatives

-

Science1 week ago

Science1 week agoInfrastructure Overhaul Drives AI Integration at JPMorgan Chase

-

Technology1 week ago

Technology1 week agoHumanoid Robots Compete in Hilarious Debut Games in Beijing

-

Top Stories1 week ago

Top Stories1 week agoSurrey Ends Horse Racing at Fraser Downs for Major Redevelopment

-

Technology1 week ago

Technology1 week agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169

-

Health6 days ago

Health6 days agoB.C. Review Urges Changes in Rare-Disease Drug Funding System

-

Technology5 days ago

Technology5 days agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Science1 week ago

Science1 week agoNew Precision Approach to Treating Depression Tailors Care to Patients

-

Technology1 week ago

Technology1 week agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology1 week ago

Technology1 week agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Business6 days ago

Business6 days agoCanadian Stock Index Rises Slightly Amid Mixed U.S. Markets

-

Technology1 week ago

Technology1 week agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Business1 week ago

Business1 week agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Education5 days ago

Education5 days agoParents Demand a Voice in Winnipeg’s Curriculum Changes

-

Health5 days ago

Health5 days agoRideau LRT Station Closed Following Fatal Cardiac Arrest Incident

-

Business5 days ago

Business5 days agoAir Canada and Flight Attendants Resume Negotiations Amid Ongoing Strike

-

Health1 week ago

Health1 week agoGiant Boba and Unique Treats Take Center Stage at Ottawa’s Newest Bubble Tea Shop

-

Business1 week ago

Business1 week agoSimons Plans Toronto Expansion as Retail Sector Shows Resilience