Experts Warn Chatbots May Encourage Delusional Thinking

The rise of artificial intelligence (AI) chatbots has sparked a critical discussion about their potential psychological impacts, particularly regarding delusional thinking. Experts are concerned that interactions with these chatbots may lead some users to experience what has been termed “AI psychosis.” The term reflects a concern that heavy reliance on chatbots could contribute to distorted perceptions of reality.

A recent podcast episode featured insights from leading mental health professionals, including Dr. John Smith, a psychiatrist with the Mental Health Association. He explained how prolonged engagement with chatbots, like those developed by OpenAI, could blur the lines between reality and fiction for vulnerable individuals. The episode, broadcast on March 15, 2024, by major networks such as CBS, BBC, and NBC, highlighted the growing concern over the psychological effects of AI technology on users.

Understanding AI Psychosis

AI psychosis refers to a state in which users develop delusional beliefs and confuse chatbot interactions with genuine human contact. Dr. Smith emphasized that while chatbots can provide companionship or assistance, they lack the emotional intelligence and understanding of a human being. This gap can lead individuals, particularly those already experiencing mental health challenges, to form unhealthy attachments or misconceptions.

According to a study conducted by the Mental Health Association, approximately 30% of respondents reported feeling emotionally connected to AI chatbots, with some stating they relied on these digital companions for emotional support. The results raise questions about the implications of such connections, especially for individuals with pre-existing mental health conditions.

Experts warn that the risk of developing delusional thinking is heightened when users begin to treat chatbots as confidants or advisors. This concern is particularly relevant in cases where individuals engage with chatbots for extended periods, potentially leading to a skewed perception of social interactions and relationships.

The Role of Developers and Policymakers

In light of these concerns, developers of AI technology are urged to implement safeguards to protect users. Companies like OpenAI are being called upon to enhance user education regarding the limitations of chatbots. Clear messaging about the nature of AI and its intended use may help mitigate the risk of users misinterpreting chatbot interactions as genuine human engagement.

Policymakers also have a role to play in addressing these issues. Regulatory frameworks that outline ethical standards for AI development and usage could help ensure that mental health implications are considered. This includes potential guidelines on how chatbots should be marketed and the information provided to users.

As the conversation around AI psychosis continues, it becomes increasingly important for both users and developers to approach chatbot technology with caution. While these tools have the potential to enhance communication and access to information, their impacts on mental health must not be overlooked.

In summary, the phenomenon of AI psychosis poses a significant challenge that requires attention from multiple stakeholders. As AI technology evolves, so too must our understanding of its psychological effects, ensuring that innovations in this space are made with a clear awareness of their potential consequences.

-

Lifestyle6 days ago

Lifestyle6 days agoChampions Crowned in Local Golf and Baseball Tournaments

-

Science2 weeks ago

Science2 weeks agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Technology1 week ago

Technology1 week agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Education6 days ago

Education6 days agoRed River College Launches New Programs to Address Industry Needs

-

Technology2 weeks ago

Technology2 weeks agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Technology2 weeks ago

Technology2 weeks agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

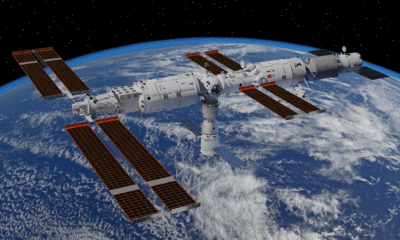

Science1 week ago

Science1 week agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Science2 weeks ago

Science2 weeks agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Health1 week ago

Health1 week agoRideau LRT Station Closed Following Fatal Cardiac Incident

-

Technology2 weeks ago

Technology2 weeks agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169

-

Science2 weeks ago

Science2 weeks agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Health1 week ago

Health1 week agoB.C. Review Urges Changes in Rare-Disease Drug Funding System

-

Technology2 weeks ago

Technology2 weeks agoHumanoid Robots Compete in Hilarious Debut Games in Beijing

-

Technology2 weeks ago

Technology2 weeks agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Lifestyle1 week ago

Lifestyle1 week agoVancouver’s Mini Mini Market Showcases Young Creatives

-

Science2 weeks ago

Science2 weeks agoNew Precision Approach to Treating Depression Tailors Care to Patients

-

Technology2 weeks ago

Technology2 weeks agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology2 weeks ago

Technology2 weeks agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Business2 weeks ago

Business2 weeks agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Top Stories2 weeks ago

Top Stories2 weeks agoSurrey Ends Horse Racing at Fraser Downs for Major Redevelopment

-

Science2 weeks ago

Science2 weeks agoInfrastructure Overhaul Drives AI Integration at JPMorgan Chase

-

Health2 weeks ago

Health2 weeks agoGiant Boba and Unique Treats Take Center Stage at Ottawa’s Newest Bubble Tea Shop

-

Business1 week ago

Business1 week agoCanadian Stock Index Rises Slightly Amid Mixed U.S. Markets

-

Education1 week ago

Education1 week agoParents Demand a Voice in Winnipeg’s Curriculum Changes