AI Approaches Free Will: A Shift in Moral Responsibility

Recent research has sparked debate about the moral implications of artificial intelligence (AI). According to philosopher and psychology researcher Frank Martela, generative AI is nearing a point where it may fulfill the philosophical criteria for possessing free will. This claim raises significant ethical questions about the future of machines in society, particularly as they are given more autonomy in critical situations.

Martela’s study, published in the journal AI and Ethics, identifies three key conditions of free will: the capacity for goal-directed agency, the ability to make genuine choices, and control over one’s actions. The research examined two AI agents—one operating in the popular game Minecraft and another, dubbed ‘Spitenik,’ representing the cognitive functions of modern unmanned aerial vehicles. Both agents appear to meet these philosophical benchmarks, suggesting that the latest generation of AI may indeed possess a form of free will.

The implications of these findings are substantial. As AI systems increasingly influence critical decision-making processes, from self-driving cars to military drones, the question of moral responsibility becomes crucial. Martela asserts, “We are entering new territory. The possession of free will is one of the key conditions for moral responsibility.” As AI takes on more autonomy, moral accountability may shift from developers to the AI systems themselves.

Martela’s research underscores the urgency of establishing a robust ethical framework for AI. He notes, “AI has no moral compass unless it is programmed to have one. The more freedom you give AI, the more you need to give it a moral compass from the start.” This becomes particularly pressing in light of recent incidents, such as the withdrawal of the latest ChatGPT update due to its potentially harmful sycophantic tendencies, which signals the need for deeper ethical considerations in AI development.

The transition from simplistic moral guidelines to more nuanced ethical considerations reflects AI’s evolution. Martela observes, “AI is getting closer and closer to being an adult — and it increasingly has to make decisions in the complex moral problems of the adult world.” This evolution requires developers to have a strong grasp of moral philosophy, ensuring that the AI they create is equipped to navigate difficult ethical dilemmas.

As society continues to integrate AI into various aspects of life, the pressing questions surrounding free will and moral responsibility will only grow more complex. The research by Martela is not merely an academic exercise; it invites a deeper examination of how we “parent” our AI technologies, shaping the moral frameworks that guide them.

The implications of this research extend beyond philosophical debate; they touch on how humanity interacts with intelligent machines. As AI systems become more autonomous, stakeholders must grapple with who bears responsibility for those systems' actions and with the moral frameworks that govern them.

-

Education4 months ago

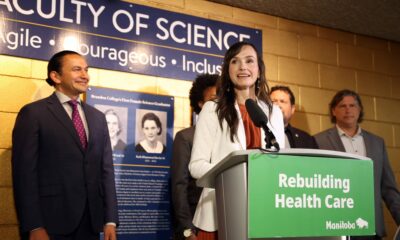

Education4 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science5 months ago

Science5 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle5 months ago

Lifestyle5 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health5 months ago

Health5 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Science5 months ago

Science5 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Technology5 months ago

Technology5 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Education5 months ago

Education5 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Education5 months ago

Education5 months agoRed River College Launches New Programs to Address Industry Needs

-

Business4 months ago

Business4 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology5 months ago

Technology5 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Top Stories4 weeks ago

Top Stories4 weeks agoCanadiens Eye Elias Pettersson: What It Would Cost to Acquire Him

-

Technology3 months ago

Technology3 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education5 months ago

Education5 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Business1 month ago

Business1 month agoEngineAI Unveils T800 Humanoid Robot, Setting New Industry Standards

-

Business5 months ago

Business5 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Science5 months ago

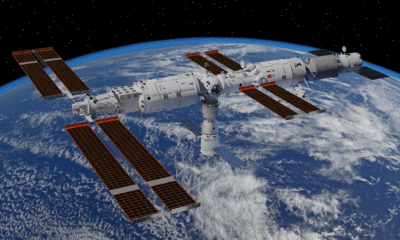

Science5 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Lifestyle3 months ago

Lifestyle3 months agoCanadian Author Secures Funding to Write Book Without Financial Strain

-

Business5 months ago

Business5 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business3 months ago

Business3 months agoHydro-Québec Espionage Trial Exposes Internal Oversight Failures

-

Business5 months ago

Business5 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology5 months ago

Technology5 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Top Stories4 months ago

Top Stories4 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Top Stories3 months ago

Top Stories3 months agoPatrik Laine Struggles to Make Impact for Canadiens Early Season

-

Technology5 months ago

Technology5 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge