Stanford Team Decodes Inner Speech, Raises Privacy Concerns

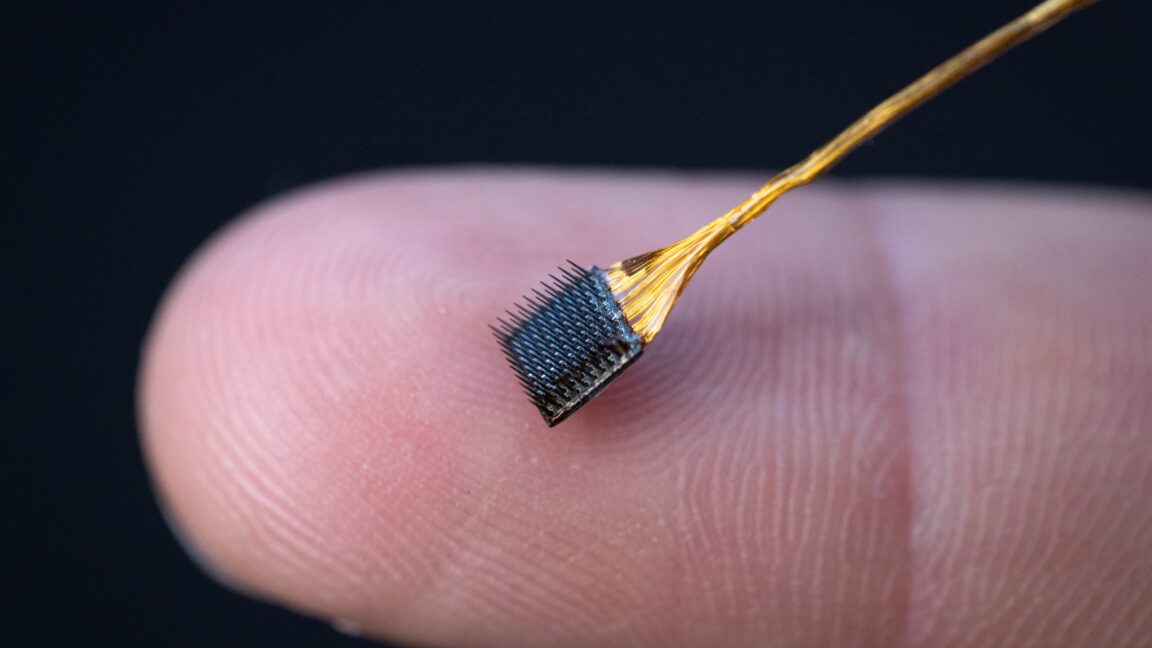

Researchers at Stanford University have developed a groundbreaking brain-computer interface (BCI) capable of decoding inner speech, a significant advancement that raises important questions about mental privacy. This new technology allows individuals with severe paralysis to communicate without physically attempting to speak, offering a potential lifeline for those suffering from conditions like ALS or tetraplegia.

Traditional BCIs have primarily focused on interpreting signals from the brain areas that control the muscle movements involved in speech. These systems require patients to physically attempt to speak, which is a challenging task for individuals with limited mobility. Recognizing this limitation, the Stanford team, led by neuroscientists Benyamin Meschede Abramovich Krasa and Erin M. Kunz, turned to decoding inner speech: the silent dialogue that occurs during activities like reading or thinking.

Innovative Approach to Inner Speech Decoding

The research team collected neural data from four participants, each with microelectrode arrays implanted in the motor cortex. The participants performed tasks that involved listening to spoken words or silently reading sentences. The analysis revealed that signals associated with inner speech were present in the same brain regions that control attempted speech. This finding raised concerns that existing speech-decoding systems could unintentionally capture private thoughts.

In a previous study at the University of California, Davis, researchers demonstrated a BCI that translated brain signals into sounds. When asked whether their system could distinguish between inner and attempted speech, the lead researcher said this was not an issue, because the system focused on muscle-control signals. Krasa's team, however, showed that such traditional systems could pick up inner speech, creating a risk of unintended privacy breaches.

To address these concerns, the Stanford team implemented two safeguards. The first automatically distinguished between attempted and inner speech signals by training the AI decoders to ignore inner speech. Krasa said this approach proved effective. The second required participants to imagine a designated "mental password" to activate the BCI. Using the phrase "Chitty chitty bang bang," the system recognized the password with 98 percent accuracy.

Testing the Limits of Inner Speech Technology

Once the privacy safeguards were in place, the researchers began testing the inner speech system with cued sentences. Participants were shown sentences on a screen and asked to imagine saying them. Accuracy reached up to 86 percent with a limited vocabulary of 50 words but dropped to 74 percent when the vocabulary expanded to 125,000 words.

The team then moved on to unstructured inner speech tests, in which participants were asked to visualize sequences of arrows on a screen. The goal was to determine whether the BCI could capture thoughts such as "up, right, up." The system performed only slightly above chance, highlighting the technology's current limitations. More open-ended tasks, such as recalling favorite foods or movie quotes, produced output that resembled gibberish.

Despite these challenges, Krasa views the inner speech neural prosthesis as a proof of concept. "We didn't think this would be possible, but we did it, and that's exciting," he said. Nonetheless, he acknowledged that the error rates remain too high for practical use and suggested that better hardware and more precise electrodes could improve the technology's performance.

Looking ahead, Krasa's team is involved in two further projects that build on this research. One aims to determine how much faster an inner speech BCI can operate than traditional attempted-speech systems. The other explores whether inner speech decoding could benefit people with aphasia, a condition that impairs speech production even when motor control is intact.

As research in this field continues, decoding inner speech raises both hope for better communication for people with disabilities and important ethical questions about accessing thoughts that many might prefer to keep private.
