Chatbots Raise Concerns Over Potential for Delusional Thought

Mental health experts and technology analysts have raised alarms about the potential impact of chatbots on users’ mental wellbeing. Concerns center on a phenomenon termed “AI psychosis,” in which prolonged interactions with AI-driven chatbots could lead to delusional thinking. The issue was notably highlighted in a podcast featuring insights from sources such as CBS, BBC, and NBC.

The term “AI psychosis” describes a situation in which individuals begin to confuse chatbot responses with reality. As chatbots become increasingly sophisticated, their ability to simulate human conversation raises questions about the psychological effects on users. Experts warn that users of these technologies, particularly those already vulnerable to mental health issues, could be at greater risk of developing distorted perceptions of reality.

Understanding the Risks of AI Interaction

In November 2023, a panel of psychologists and AI specialists convened to discuss the implications of chatbot interactions. The consensus among the panelists was that while chatbots can provide valuable support and companionship, there is a significant risk that they could inadvertently reinforce delusional thoughts in susceptible individuals.

Dr. Sarah Mitchell, a clinical psychologist, emphasized the need for caution. “We are entering uncharted territory with these technologies. The lines between actual human interaction and AI-generated responses are blurring,” Dr. Mitchell stated. “For some users, especially those with existing mental health conditions, this could lead to a dangerous cycle of delusion.”

As chatbots gain popularity across various platforms, the potential for misuse or misunderstanding of their capabilities becomes a pressing concern. Many users may not recognize that AI lacks genuine understanding or emotional insight, which could lead to misplaced trust in these systems.

The Role of Technology in Modern Psychology

The increasing reliance on technology for mental health support has raised both hopes and concerns. On one hand, chatbots can offer immediate assistance to those in need, providing resources and a sense of companionship. On the other hand, the lack of human oversight in these interactions poses risks that cannot be ignored.

According to a recent study conducted by the Mental Health Technology Group, nearly 30% of individuals who frequently engage with chatbots reported feeling more isolated after their interactions. This finding highlights the need for ongoing research into the psychological effects of AI technologies.

Furthermore, the rise of “AI psychosis” is not merely an abstract concern. Experts emphasize that public awareness and education are crucial. Users must be informed about the limitations of chatbots and the importance of seeking professional help when experiencing mental health challenges.

The technology industry also bears responsibility in this matter. Developers are urged to implement safeguards within chatbot frameworks, ensuring that users are aware of the boundaries of AI interaction. Effective regulation and oversight mechanisms could mitigate potential adverse effects on mental health.

As the debate continues, it is clear that while chatbots have the potential to enhance human connection, they also carry risks that warrant serious consideration. The intersection of technology and mental health is becoming increasingly complex, necessitating a collaborative effort among researchers, developers, and mental health professionals to foster safe and effective use of AI in everyday life.

-

Education3 months ago

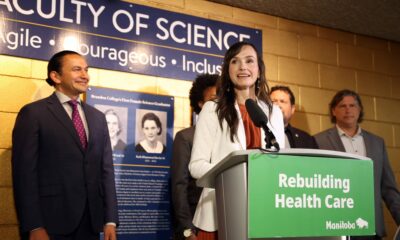

Education3 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science4 months ago

Science4 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle3 months ago

Lifestyle3 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health4 months ago

Health4 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Technology3 months ago

Technology3 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Science4 months ago

Science4 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Education3 months ago

Education3 months agoRed River College Launches New Programs to Address Industry Needs

-

Technology4 months ago

Technology4 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Business3 months ago

Business3 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology2 months ago

Technology2 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education3 months ago

Education3 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Science3 months ago

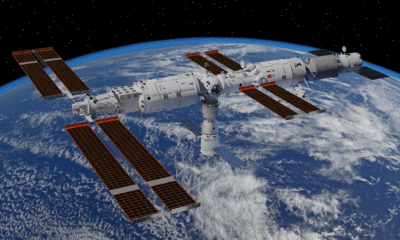

Science3 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Education3 months ago

Education3 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Technology4 months ago

Technology4 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Business4 months ago

Business4 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business3 months ago

Business3 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology2 months ago

Technology2 months agoHuawei MatePad 12X Redefines Tablet Experience for Professionals

-

Business3 months ago

Business3 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Technology4 months ago

Technology4 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Technology4 months ago

Technology4 months agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology4 months ago

Technology4 months agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Science4 months ago

Science4 months agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Top Stories2 months ago

Top Stories2 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Technology4 months ago

Technology4 months agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169