
Authentic Content Fight: Niche AI Tools Challenge Tech Giants

As the digital landscape evolves, the integrity of online content is under increasing scrutiny. In 2025, a pressing question remains: can consumers trust what they see and read online? That question has set off a contest over content authenticity, pitting established tech giants against emerging niche AI tools. Its outcome could redefine how users evaluate and verify information in the age of artificial intelligence.

The Rise of Authenticity Concerns

The spread of AI technologies has inadvertently created a crisis of authenticity. According to the Stanford AI Index 2024, reported incidents involving synthetic media have surged, fueling widespread concern about misinformation. The report underscores the dual nature of generative tools: they offer remarkable capabilities, but their misuse undermines trust. Regulators are responding. The EU AI Act introduces transparency requirements for deepfakes and other synthetic content, and Spain has proposed fines for failing to label AI-generated media. These measures push platforms and publishers to establish clear content provenance.

Against this backdrop, two distinct strategies have emerged. Major tech companies focus on provenance: standards that attach verifiable metadata to content at the moment of capture or editing. The Content Authenticity Initiative (CAI) and the Coalition for Content Provenance and Authenticity (C2PA) lead this effort. Adobe, for instance, has reported that more than 2,000 members, including camera manufacturers and social platforms, have joined the initiative behind Content Credentials. YouTube has also begun labeling both synthetic and camera-captured media to improve transparency.
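To make the provenance idea concrete, here is a minimal Python sketch of how signed metadata can be bound to content so that later edits become detectable. It is not the C2PA manifest format: the key, the `make_manifest` and `verify_manifest` helpers, and the claim fields are hypothetical, and an HMAC shared secret stands in for the certificate-based signatures that real Content Credentials use.

```python
import hashlib
import hmac
import json

# Hypothetical shared key standing in for a capture device's signing credential.
# Real C2PA manifests use certificate-based (public-key) signatures, not HMAC.
SIGNING_KEY = b"demo-device-key"


def make_manifest(content: bytes, claims: dict) -> dict:
    """Bind provenance claims to content by hashing the bytes and signing the result."""
    payload = {"content_sha256": hashlib.sha256(content).hexdigest(), "claims": claims}
    serialized = json.dumps(payload, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, serialized, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the signature is valid and the content is unchanged."""
    serialized = json.dumps(manifest["payload"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, serialized, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the metadata itself was altered after signing
    # The signature is valid, so check that the content still matches its recorded hash.
    return manifest["payload"]["content_sha256"] == hashlib.sha256(content).hexdigest()


photo = b"example image bytes"
manifest = make_manifest(photo, {"device": "ExampleCam", "action": "captured"})
print(verify_manifest(photo, manifest))         # True
print(verify_manifest(photo + b"x", manifest))  # False: content was edited
```

The point of the design is that the hash ties the claims to one exact file, and the signature ties the hash to a trusted signer, so changing either the pixels or the claims breaks verification.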

Niche AI startups, by contrast, tackle authenticity after the fact through post-hoc detection. Their tools analyze text for patterns typical of AI generation, run fact-checks, and refine AI-assisted prose so it reads more naturally. Bundling these functions together is where smaller players can make a significant impact.

Shaping the Future of Content Authenticity

Distribution channels play a crucial role in this fight. YouTube now requires creators to disclose realistic AI-generated content, particularly on sensitive topics such as news and health. Google is also tying authenticity indicators to C2PA metadata, allowing viewers to verify where a clip came from. These measures are not foolproof, but they set a baseline expectation for authenticity.

The push for authenticity extends across sectors. In education, instructors and students increasingly want reliable tools that verify originality while minimizing false positives. Newsroom editors demand rigorous verification of claims, along with provenance signals for images and videos. Compliance teams are also screening documents for AI-generated text and unattributed quotations.

Among the startups in this space is JustDone, which emphasizes rapid adaptation to new models. For example, it released updated AI detection models calibrated for GPT-4.5 shortly after OpenAI launched that model in early 2025. This agility lets it respond to specific needs faster than larger competitors. Its “Humanizer” tool was also retrained in early 2025 to improve its academic tone based on user feedback.

No AI detection tool can guarantee 100% accuracy, but combining several tools can improve reliability. The Stanford AI Index notes that even the strongest detection systems struggle with paraphrased or restyled content. A McKinsey survey likewise finds that many organizations adopt AI faster than they establish validation protocols, leaving significant oversight gaps.

The core lesson is to avoid relying on any single verification method. Combining provenance credentials, detector confidence scores, and editorial review yields a more resilient framework. As regulatory expectations rise, publishers must be able to show that they took reasonable steps to avoid spreading fabricated content.
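The layered approach can be sketched as a simple triage rule. This is a minimal Python illustration under stated assumptions, not a standard or any vendor's product logic: the `Evidence` fields, the way each signal is encoded, the 0.8 threshold, and the outcome labels are all hypothetical, and a real workflow would calibrate thresholds against its own data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Evidence:
    has_valid_credentials: bool  # signed provenance manifest present and verified
    detector_scores: List[float] = field(default_factory=list)  # 0..1, higher = more likely AI
    editor_verdict: Optional[bool] = None  # True = approved, False = rejected, None = not reviewed


def triage(ev: Evidence, threshold: float = 0.8) -> str:
    """Combine independent signals; the threshold is illustrative, not calibrated."""
    if ev.editor_verdict is not None:
        # A documented human review is the final word.
        return "publish" if ev.editor_verdict else "reject"
    # Treat the item as strongly flagged only if every detector agrees, since
    # any single detector can misfire (for example on paraphrased text).
    strongly_flagged = bool(ev.detector_scores) and min(ev.detector_scores) >= threshold
    if strongly_flagged:
        return "escalate"  # conflicting or strong evidence: priority editor review
    if ev.has_valid_credentials:
        return "publish"  # signed media with no strong detector signal
    return "needs_review"  # unsigned and unresolved: route to an editor


print(triage(Evidence(True, [0.1, 0.3])))                    # publish
print(triage(Evidence(True, [0.9, 0.85])))                   # escalate
print(triage(Evidence(False, [0.9, 0.4])))                   # needs_review
print(triage(Evidence(False, [0.95], editor_verdict=True)))  # publish
```

No single signal can reject content on its own here: detector scores only escalate to a human, and credentials only approve when detectors do not strongly disagree, which mirrors the advice to combine methods rather than trust one.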

Universities are responding by revising their policies on AI assistance and authorship. Tools like Turnitin remain widespread, but educators now combine draft histories, plagiarism checks, and multiple detection systems to build stronger cases when they suspect misconduct. Newsrooms face similar pressure to validate content through robust methods, including C2PA metadata for images and videos alongside thorough fact-checking.

Several trends are emerging. First, provenance standards are becoming the norm as more devices and applications adopt C2PA. This won’t eliminate misinformation, but it gives trustworthy publishers a competitive edge. Second, AI detection is becoming more specialized, with tools designed for specific contexts such as legal documents or academic essays. Finally, governance is becoming more formal as organizations recognize the need for comprehensive oversight of AI-generated content.

Ultimately, both tech giants and niche AI tools have vital roles to play. Major platforms control distribution and set disclosure standards, while smaller startups offer solutions tailored to specific needs. For students, editors, and researchers, the most effective approach in 2025 is to prioritize signed media, run independent checks, and document every verification step. Authenticity is not merely a filter; it is a deliberate choice made from the outset.

-

Education3 months ago

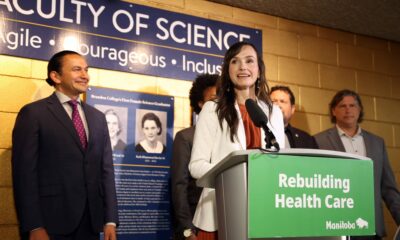

Education3 months agoBrandon University’s Failed $5 Million Project Sparks Oversight Review

-

Science4 months ago

Science4 months agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Lifestyle3 months ago

Lifestyle3 months agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Health4 months ago

Health4 months agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Technology3 months ago

Technology3 months agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Science4 months ago

Science4 months agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Education3 months ago

Education3 months agoRed River College Launches New Programs to Address Industry Needs

-

Technology4 months ago

Technology4 months agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Business3 months ago

Business3 months agoRocket Lab Reports Strong Q2 2025 Revenue Growth and Future Plans

-

Technology2 months ago

Technology2 months agoDiscord Faces Serious Security Breach Affecting Millions

-

Education3 months ago

Education3 months agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Science3 months ago

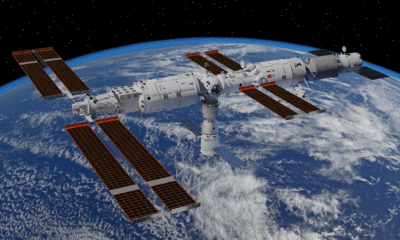

Science3 months agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Education3 months ago

Education3 months agoNew SĆIȺNEW̱ SṮEȽIṮḴEȽ Elementary Opens in Langford for 2025/2026 Year

-

Technology4 months ago

Technology4 months agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Business4 months ago

Business4 months agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Business3 months ago

Business3 months agoDawson City Residents Rally Around Buy Canadian Movement

-

Business3 months ago

Business3 months agoBNA Brewing to Open New Bowling Alley in Downtown Penticton

-

Technology2 months ago

Technology2 months agoHuawei MatePad 12X Redefines Tablet Experience for Professionals

-

Technology4 months ago

Technology4 months agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Technology4 months ago

Technology4 months agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology4 months ago

Technology4 months agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Science4 months ago

Science4 months agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Top Stories2 months ago

Top Stories2 months agoBlue Jays Shift José Berríos to Bullpen Ahead of Playoffs

-

Technology4 months ago

Technology4 months agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169