
Google Partners with StopNCII to Combat Non-Consensual Images

Alphabet Inc.’s Google is set to collaborate with StopNCII, a nonprofit organization dedicated to preventing the spread of non-consensual images online. This partnership represents a notable advance in Google’s efforts to address image-based abuse, a concern that has gained increasing attention in recent years.

StopNCII uses technology that lets victims of image-based abuse create digital fingerprints, known as hashes, of their intimate images. Only these hashes, not the images themselves, are shared with partner platforms such as Facebook, Instagram, Reddit, and OnlyFans, which use them to block re-uploads of the images without victims having to report each one. Google announced the partnership at an NCII summit held at its London office, where it hosted SWGfL, StopNCII’s parent charity.
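The article does not say which hashing algorithm StopNCII uses, so the Python sketch below is only an illustration under an assumption: it uses a toy “average hash” on a hypothetical 8x8 grayscale grid, and every name in it is hypothetical. It shows the general idea: platforms compare fingerprints rather than images, a lightly edited re-upload still matches, and a different image does not.

```python
from typing import List, Set

Grid = List[List[int]]  # hypothetical 8x8 grayscale image, pixel values 0-255


def average_hash(pixels: Grid) -> int:
    """Toy perceptual hash (an assumption, not StopNCII's algorithm):
    one bit per pixel, set when the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def is_blocked(candidate: Grid, blocklist: Set[int], threshold: int = 4) -> bool:
    """Hash an upload and compare it only against shared hashes, never the original images."""
    h = average_hash(candidate)
    return any(hamming(h, known) <= threshold for known in blocklist)


# The image is hashed on the victim's side; only the hash enters the shared blocklist.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
blocklist = {average_hash(original)}

# A slightly brightened re-upload keeps the same bright/dark pattern, so it matches.
reupload = [[min(255, p + 10) for p in row] for row in original]

# A different image (here, the gradient reversed) produces an unrelated hash.
different = [[(63 - (r * 8 + c)) * 4 for c in range(8)] for r in range(8)]

print(is_blocked(reupload, blocklist))   # True
print(is_blocked(different, blocklist))  # False
```

Real perceptual hashes are far more robust than this toy, but the same limitation applies: an image that was never hashed, such as a newly generated deepfake, has nothing in the blocklist to match.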

For victims, the implications are profound. David Wright, the chief executive officer of SWGfL, emphasized the importance of this initiative, stating, “Knowing that their content doesn’t appear in search — I can’t begin to articulate the impact that this has on individuals.” Although Google will not immediately be listed as an official partner of StopNCII, a spokesperson indicated that the company is currently testing the technology and anticipates implementing it within the next few months.

Delays and Criticism

Google’s slower pace in adopting this technology has drawn criticism from advocates and lawmakers. StopNCII launched in late 2021, built on detection tools originally developed by Meta. Facebook and Instagram were among the first platforms to adopt it, with TikTok and Bumble joining in December 2022. Microsoft integrated the system into its Bing search engine in September 2023, well ahead of Google.

In April 2024, Google informed UK lawmakers that it had “policy and practical concerns about the interoperability of the database,” which has hindered its participation until now. Critics argue that the company, given its extensive resources, should take more proactive steps to eliminate non-consensual images without placing the burden on victims to create hashes.

Addressing AI-Generated Content

Some advocates believe that Google’s initiative, while a positive step, does not go far enough. Adam Dodge, founder of the advocacy group End Technology-Enabled Abuse, said the approach still depends on victims coming forward: “It’s a step in the right direction. But I think this still puts a burden on victims to self-report.”

Notably absent from Google’s announcement was any mention of AI-generated non-consensual imagery, commonly known as deepfakes. Because StopNCII’s technology matches only images that have already been hashed, it cannot preemptively block synthetic or entirely different images. Wright explained, “If it’s a synthetic or an entirely different image, the hash is not going to trap it.”

In 2023, a study by Bloomberg revealed that Google Search was the leading traffic source for websites hosting deepfakes or sexually explicit AI-generated content. Since that time, Google has initiated efforts to reduce and downrank such content in its search results.

As Google moves forward with this partnership, it faces the challenge of addressing both the immediate needs of victims and the evolving landscape of digital abuse, including the rise of AI-generated imagery.

-

Lifestyle3 weeks ago

Lifestyle3 weeks agoWinnipeg Celebrates Culinary Creativity During Le Burger Week 2025

-

Science1 month ago

Science1 month agoMicrosoft Confirms U.S. Law Overrules Canadian Data Sovereignty

-

Education4 weeks ago

Education4 weeks agoRed River College Launches New Programs to Address Industry Needs

-

Technology4 weeks ago

Technology4 weeks agoDragon Ball: Sparking! Zero Launching on Switch and Switch 2 This November

-

Health1 month ago

Health1 month agoMontreal’s Groupe Marcelle Leads Canadian Cosmetic Industry Growth

-

Science1 month ago

Science1 month agoTech Innovator Amandipp Singh Transforms Hiring for Disabled

-

Technology1 month ago

Technology1 month agoGoogle Pixel 10 Pro Fold Specs Unveiled Ahead of Launch

-

Science4 weeks ago

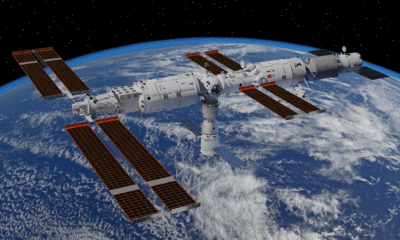

Science4 weeks agoChina’s Wukong Spacesuit Sets New Standard for AI in Space

-

Technology1 month ago

Technology1 month agoWorld of Warcraft Players Buzz Over 19-Quest Bee Challenge

-

Science1 month ago

Science1 month agoXi Labs Innovates with New AI Operating System Set for 2025 Launch

-

Business4 weeks ago

Business4 weeks agoDawson City Residents Rally Around Buy Canadian Movement

-

Technology1 month ago

Technology1 month agoFuture Entertainment Launches DDoD with Gameplay Trailer Showcase

-

Business1 month ago

Business1 month agoNew Estimates Reveal ChatGPT-5 Energy Use Could Soar

-

Technology1 month ago

Technology1 month agoGlobal Launch of Ragnarok M: Classic Set for September 3, 2025

-

Technology1 month ago

Technology1 month agoNew IDR01 Smart Ring Offers Advanced Sports Tracking for $169

-

Technology1 month ago

Technology1 month agoInnovative 140W GaN Travel Adapter Combines Power and Convenience

-

Technology1 month ago

Technology1 month agoHumanoid Robots Compete in Hilarious Debut Games in Beijing

-

Science1 month ago

Science1 month agoNew Precision Approach to Treating Depression Tailors Care to Patients

-

Health1 month ago

Health1 month agoGiant Boba and Unique Treats Take Center Stage at Ottawa’s Newest Bubble Tea Shop

-

Technology1 month ago

Technology1 month agoQuoted Tech Launches Back-to-School Discounts on PCs

-

Technology1 month ago

Technology1 month agoDiscover the Relaxing Charm of Tiny Bookshop: A Cozy Gaming Escape

-

Technology4 weeks ago

Technology4 weeks agoArsanesia Unveils Smith’s Chronicles with Steam Page and Trailer

-

Education4 weeks ago

Education4 weeks agoAlberta Teachers’ Strike: Potential Impacts on Students and Families

-

Technology4 weeks ago

Technology4 weeks agoRaspberry Pi Unveils $40 Touchscreen for Innovative Projects